Computer science 101

Numbers and maths can make people in creative industries feel uncomfortable.

If you’ve ever considered yourself a right-brained ‘visual’ person, you’ve probably also thought of yourself as bad with numbers. You’ve probably placed jobs and ideas that involve numbers and maths – like programming, accounting or physics – in the too-hard basket.

Over the last 12 months, I’ve come to the beautiful realisation that it doesn’t have to be that way. In fact, programming is a serious weapon, and it’s the creative’s to own.

The advertising creative with an understanding of how computers work, beyond the superficial, holds an ace in the pack.

They have better ideas, they’re more hireable, and they can execute ideas and apps without being taken for a ride by shady developers. They are their own (and I shudder to use the term) ‘creative technologists’. Best of all, they can measure the quality and impact of their creative work.

These are the home truths every creative – designer, art director, copywriter, creative director – should be aware of in 2017.

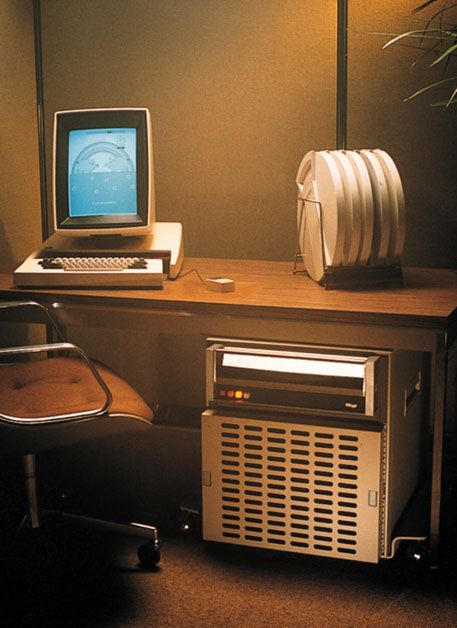

Firstly, know that the history of computers is mind-blowing.

You need to know that computers aren’t some miracle that evolved in the 70s and 80s, in the era of Apple and Microsoft.

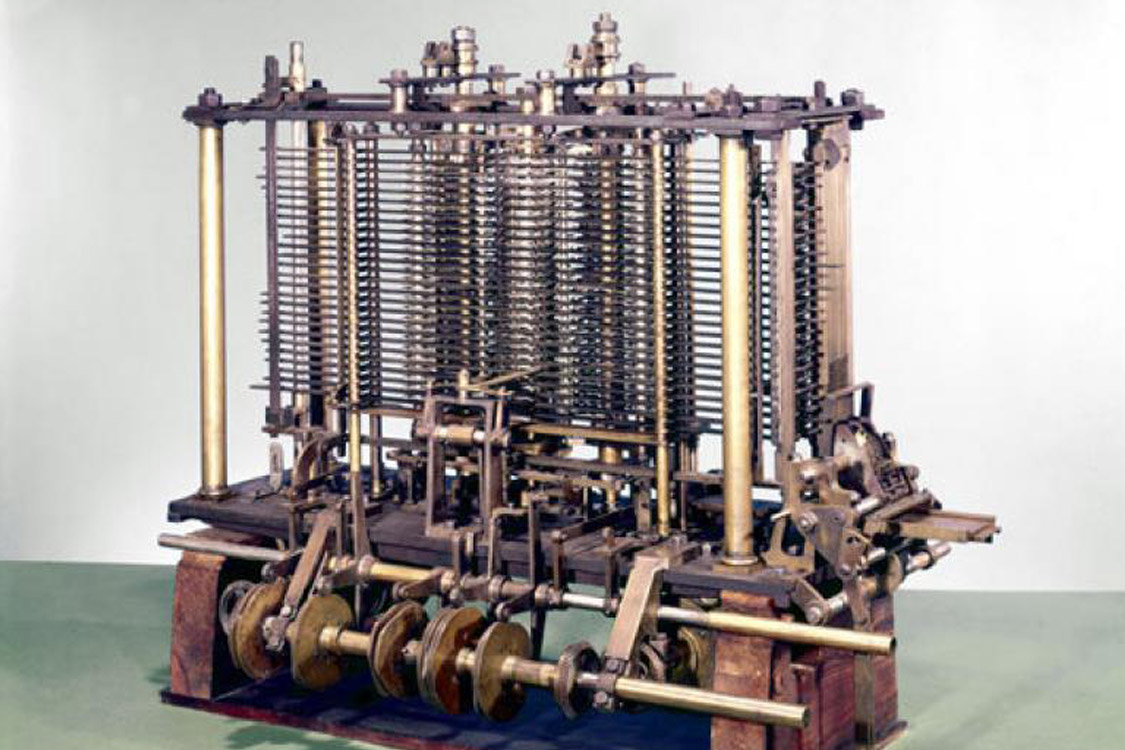

Their history is traced back to the mid-1800s, when they were merely thought experiments.

Mechanical adding machines (like giant, beefed-up abacuses) were being designed, and partly built, by pioneers like Charles Babbage. These performed singular tasks, like writing out all the prime numbers up to 10 million.
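Babbage’s Difference Engine is usually explained through the method of finite differences, which lets a machine tabulate a polynomial using nothing but addition. Below is a minimal Python sketch of that idea, not a model of Babbage’s actual mechanism. The function name `tabulate` and the example polynomial, x² + x + 41, are my own choices for illustration.

```python
def tabulate(initial_differences, steps):
    """Tabulate a polynomial using only addition (method of finite differences).

    initial_differences is [f(0), first difference, second difference, ...].
    For a degree-n polynomial the n-th difference never changes.
    """
    diffs = list(initial_differences)
    table = []
    for _ in range(steps):
        table.append(diffs[0])
        # Add each column into the one to its left: pure addition, no multiplying.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table

# f(x) = x^2 + x + 41: f(0) = 41, f(1) - f(0) = 2, second difference = 2
values = tabulate([41, 2, 2], 6)
print(values)  # [41, 43, 47, 53, 61, 71]
```

Each turn of the loop is just a handful of sums, exactly the kind of repetitive work a crank-driven machine can grind through.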

Then Ada Lovelace went one step further. She theorised that any information – music, text, pictures, symbols etc. – could be expressed in digital form and manipulated by these machines. They just needed a way to be fed the information.

The answer was what we know as binary – the repetitious ones and zeros that computers can understand. It’s actually mind-blowingly simple. An electrical current through a wire is a 1. Lack of current means a 0. Therefore, you can send a digital message as a series of pulses, a bit like Morse code. The letter X, for example, is written as 01011000 in computer-speak.
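To see the letter X turn into 01011000 for yourself, here’s a short Python sketch. It assumes plain ASCII text, where every character fits in 8 bits; the helper names `to_binary` and `from_binary` are hypothetical.

```python
def to_binary(text):
    """Return each character's code as an 8-bit string of 1s and 0s.

    Assumes ASCII: characters beyond code 255 would need more than 8 bits.
    """
    return [format(ord(ch), "08b") for ch in text]

def from_binary(bits):
    """Turn a list of binary strings back into text."""
    return "".join(chr(int(b, 2)) for b in bits)

print(to_binary("X"))                # ['01011000']
print(from_binary(to_binary("Hi")))  # Hi
```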

The HDMI cable plugged into your TV does exactly this – transfers 1s and 0s.

Once you put information into a computer, it needs to process it in a way that matches how our brains work; it needs to follow human logic. Everything we do, every decision we make, follows a logical rule. If hungry, then I eat. Do I order French fries or mash with my steak? When full, stop eating.

It’s no surprise that words like ‘and’, ‘or’, ‘if’, ‘when’ and ‘then’ are common in today’s programming languages. These are simply instructions telling a computer how to deal logically with problems. That’s the very essence of programming.
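Here is how the steak-dinner decisions might look as a short Python sketch. Python spells ‘if’, ‘and’ and ‘or’ directly but has no ‘when’ or ‘then’ keyword, so ‘when full, stop eating’ becomes a `while` loop. The function names are made up for illustration.

```python
def is_hungry(stomach_rumbling, skipped_lunch):
    # 'or': either reason on its own is enough
    return stomach_rumbling or skipped_lunch

def order(hungry, craving_crunch):
    # 'if ... and ...': both conditions must hold to get fries
    if hungry and craving_crunch:
        return "steak with French fries"
    elif hungry:
        return "steak with mash"
    else:
        return "nothing"

def eat(bites_until_full):
    bites = 0
    # 'when full, stop eating': keep going only while not yet full
    while bites < bites_until_full:
        bites += 1
    return bites

print(order(is_hungry(False, True), craving_crunch=True))  # steak with French fries
```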

Writing a program merely tells your computer processor how to handle your binary data. This is where things get slightly harder, so I will break it down into a simple (though not entirely accurate) explanation.

The silicon chip in your machine contains billions of tiny transistors etched into its surface. These work like little light switches, each either on or off.

Take two of these switches and wire them one after the other. Current only gets through if the first switch AND the second are switched on. Those are the conditions for ‘and’.

Now wire the two switches side by side. Current gets through if either one is switched on. One switch OR the other: those are the conditions for ‘or’.
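A quick way to convince yourself of the switch story is to print the truth tables. In this Python sketch, `True` stands for a switch that’s on, and `series` and `parallel` are hypothetical names for the two wirings. As a bonus, combining them (plus a NOT) gives a half adder, the circuit that adds two single bits.

```python
def series(a, b):
    """Two switches one after the other: current flows only if both are on (AND)."""
    return a and b

def parallel(a, b):
    """Two switches side by side: current flows if either is on (OR)."""
    return a or b

def half_adder(a, b):
    """Add two single bits using only AND, OR and NOT; returns (sum, carry)."""
    total = parallel(a, b) and not series(a, b)  # on if exactly one input is on
    carry = series(a, b)                          # on only if both inputs are on
    return total, carry

print("a b | AND OR")
for a in (False, True):
    for b in (False, True):
        print(int(a), int(b), "|", int(series(a, b)), " ", int(parallel(a, b)))
```

Adding 1 + 1 with `half_adder(True, True)` gives a sum of 0 and a carry of 1, which is 10, the binary way of writing 2.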

Controlling these switches is the heart of programming: writing code a machine can understand, so it can break down and process your data.

Understanding core principles like these has a trickle-down effect on all your creative thinking.

Secondly, change the way you look at your computer.

I like to think of computers as being savant-level geniuses. Rain Man to the power of fifteen billion.

That little silver rectangle is actually just a brilliant, pasty-faced teen with Asperger’s.

Like a true savant, they lack the social awareness to understand grey areas. Therefore, any task you assign your computer has to be black and white, with no room for ambiguity. Pure instruction. It’s just the opposite of what flesh-and-bone creatives are paid to do.

So instead of treating your computer like a slave, think of it as your sidekick. Your own little R2-D2.

Most of us assign tasks through graphical user interfaces, using inputs like mouse clicks and keyboard shortcuts. We can create data (words, images, audio etc.) through these means. But working like this does not harness a computer’s full potential.

Instead, learn to put your little machine to work. Modify and manipulate existing data. There’s enough out there. Grab a microphone. Plug into one of the weather APIs freely available on the internet. Get a spreadsheet full of data from your client.

Once you have your data, dream up ways to manipulate it. To distort it. To display it. On a projection wall. In music. It’s all possible.
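As a taste of what ‘manipulate, distort, display’ can mean in code, here’s a Python sketch that reads a spreadsheet exported as CSV, then shows the same numbers two ways: as a text bar chart and as musical notes. The column names and figures are made up, and the note mapping assumes MIDI numbering, where 60 is middle C.

```python
import csv
import io

# Hypothetical client spreadsheet exported as CSV (invented numbers).
CLIENT_CSV = """month,sales
Jan,120
Feb,90
Mar,150
"""

def load(csv_text):
    """Read the CSV into (month, sales) pairs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row["month"], int(row["sales"])) for row in reader]

def as_bars(rows, width=20):
    """Display: scale each value to a bar of '#' characters."""
    peak = max(value for _, value in rows)
    return [f"{month} {'#' * round(value / peak * width)}" for month, value in rows]

def as_notes(rows, low=60, high=72):
    """Sonify: map each value onto a MIDI note number between low and high."""
    values = [value for _, value in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid dividing by zero if every value is the same
    return [low + round((v - lo) / span * (high - low)) for v in values]

rows = load(CLIENT_CSV)
print("\n".join(as_bars(rows)))
print(as_notes(rows))  # [66, 60, 72]
```

Same three numbers, two very different outputs: swap the display function and the data becomes something new.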

Collect addresses of people using a web page and a form. Send them glitter.

Create amazing wall maps of famous cities, but instead of doing it in Photoshop, write code that does it. These guys have.

Take mouse sketches, and compare them to known icons in real time. Google did.
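Google’s system is far more sophisticated than anything that fits here, so treat this as a deliberately crude stand-in, not their method. It shows the core idea of comparing a sketch to known icons: store a few tiny template drawings, count how many pixels differ from the sketch, and pick the closest. The 5×5 icons are hypothetical.

```python
# Tiny 5x5 'icons' drawn as strings: '#' is ink, '.' is blank (hypothetical templates).
ICONS = {
    "line":  ["..#..", "..#..", "..#..", "..#..", "..#.."],
    "box":   ["#####", "#...#", "#...#", "#...#", "#####"],
    "cross": ["#...#", ".#.#.", "..#..", ".#.#.", "#...#"],
}

def distance(a, b):
    """Count the pixels where two drawings disagree (Hamming distance)."""
    return sum(pa != pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))

def best_match(sketch):
    """Return the name of the icon closest to the sketch."""
    return min(ICONS, key=lambda name: distance(sketch, ICONS[name]))

# A box drawn by a shaky mouse hand: two corners missing.
wobbly_box = ["####.", "#...#", "#...#", "#...#", ".####"]
print(best_match(wobbly_box))  # box
```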

Replicating the same task, over and over, is where your little box comes to the party: taking input data, messing with it, then spitting out the results.

Execute variations of that code. Teach your little man which executions are good and which are bad. Boom, you’ve just created something that resembles artificial intelligence.
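Here’s what ‘execute variations, keep the good ones’ looks like at its most bare-bones: a Python sketch in the spirit of Richard Dawkins’ ‘weasel’ program. It makes random one-letter variations of a phrase and keeps whichever scores best. The `score` function is where you teach the machine what good looks like; here it simply counts letters matching a hypothetical target phrase, which is far simpler than real AI.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "SEND GLITTER"  # hypothetical goal that the scorer rewards

def score(candidate):
    """The 'taste' we teach the machine: count characters matching the target."""
    return sum(c == t for c, t in zip(candidate, TARGET))

def mutate(parent, rng):
    """Make one variation by changing a single random character."""
    i = rng.randrange(len(parent))
    return parent[:i] + rng.choice(ALPHABET) + parent[i + 1:]

def evolve(seed=1, children=100, max_generations=5000):
    rng = random.Random(seed)
    best = "".join(rng.choice(ALPHABET) for _ in TARGET)
    history = [score(best)]
    for _ in range(max_generations):
        if best == TARGET:
            break
        # Run many variations; the parent stays in the pool, so scores never drop.
        pool = [best] + [mutate(best, rng) for _ in range(children)]
        best = max(pool, key=score)
        history.append(score(best))
    return best, history

best, history = evolve()
print(best, "after", len(history) - 1, "generations")
```

Swap `score` for something that rates layouts, colours or headlines and the same loop still applies: write it once, run it a million times.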

And the beauty? Write code once, execute a million times. Unlike agency employees, a computer won’t grumble.

Thirdly, know that technology people are different to ad people.

In ad land, possession is nine tenths of the law.

Ideas, or concepts, have owners – those who first thought of a campaign, poster or script. This is because we are a transient bunch, valuing awards and reputation as we swing from agency to agency. It makes sense to put your name on things.

In the land of computers, things are blissfully the opposite.

Ideas are not considered brain-farts of random genius. Ideas are built over decades, like scar tissue; one improvement laid upon another. In fact, they don’t even start out as fully-formed ideas.

This has long been the norm. The open source software movement is about giving away your code ... so the next poor sod who comes along doesn’t have to reinvent the wheel.

It means everyone from one man in Switzerland, Jürg Lehni, to Facebook is handing over the keys to their code for anyone to download on GitHub. That’s like David Droga opening up his bottom drawer of scamps to the masses.

Evan Williams, co-founder of Twitter, is quoted as saying “I didn’t invent Twitter ... People don’t invent things on the Internet. They simply expand on an idea that already exists.”

Sure, there are big personalities in tech (some that would make Bernbach and Ogilvy grovel): Grove, Gates, Jobs, Andreessen and Bezos, to name a few. But they stand on the shoulders of those before them: the many thousands of nameless employees who actually built the stuff, and the people before that, and those before that, and so on.

Fourthly, know that perfectionism isn’t welcome here.

Perfectionism is why this article didn’t get posted for about two weeks. It’s an affliction I’d rather live without, but I’m managing it.

In the computer world, perfectionism will get a fool killed.

Painstaking obsession with detail is applauded in agency life. Staying up till 2am kerning every headline in a pitch doc might be a labour of love, but it doesn’t achieve anything meaningful.

The programmer who spends the same amount of time refactoring code, for no tangible gain, will be found out pretty quickly.

In technology, ideas, features and executions can be tested and measured. That’s like kryptonite to today’s brilliant advertising creative. It’s also at odds with ‘the most effective ideas are also the most creative’, one of our favourite lines that gets trotted out all the time.

The truth is, sometimes that's true, sometimes that's not.

Being accountable for results in the form of cold, hard numbers isn’t the death of creativity. It just means we’re more accountable for what works. It means trying things and moving on faster.
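If ‘cold, hard numbers’ sounds abstract, here’s a minimal Python sketch of one common way to compare two ad variants: a two-proportion z-test on click-through rates. The click and view counts are invented and the function name is hypothetical.

```python
import math

def compare_ctr(clicks_a, views_a, clicks_b, views_b):
    """Two-proportion z-test: is B's click-through rate really different from A's?"""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled rate assumes, for the test, that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a, p_b, z, p_value

# Invented results: headline A vs headline B, 10,000 views each.
p_a, p_b, z, p_value = compare_ctr(200, 10_000, 260, 10_000)
print(f"A: {p_a:.1%}  B: {p_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
```

A p-value under 0.05 is the conventional (and somewhat arbitrary) bar for saying B’s higher click-through rate probably isn’t just luck.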

Unlike the world of big-budget advertising, the costs of fucking up in digital formats are next to zero. So why wait? Get stuck in.

Finally, relax knowing that computers won’t (knowingly) steal your job.

I previously wrote about robots coming to steal the ad creative’s job. It was a clickbait headline that would make a BuzzFeed ‘journo’ proud.

The truth is, it’s unlikely we’ll be superseded for a long time.

Though we’ve briefly touched on a computer’s ability to deal with logical questions that are black and white, the grey area in between remains the domain of the human.

Our brain’s many impulses and analog waves combine to produce not just binary yes-or-no answers but also answers such as “maybe” and “probably”.

John Kelly, director of IBM Research, believes AI “isn’t about replacing human thinking with machine thinking. Rather, in the era of cognitive systems, humans and machines will collaborate to produce better results, each bringing their own superior skills to the partnership.”

With that established, we can look forward to what a partnership between creative and computer will bring.

In The Innovators, his history of computers, Walter Isaacson reflects on the “first round of innovation” since the internet, which involved “pouring old wine – books, newspapers, opinion pieces, journals, songs, television shows, movies – into new digital bottles.”

With round one done, what kind of new wine will the creative create?

There’s never been a more exciting time.

Read this book for an appreciation of computers

The Innovators by Walter Isaacson. I can’t recommend it enough.